From handling a high number of studies and heterogeneity to conducting a meaningful synthesis

Author: Amir Mohsenpour, MSc Public Health Student, LSHTM.

The third of the four-part seminar series exploring evidence synthesis took place on July 28th. This time, we were excited to welcome Dr. Silvia Maritano (University of Turin, Italy) and Laurence Blanchard (LSHTM), who is herself one of the co-organisers of the synthesis series, to speak on an ever-important topic in evidence synthesis: how best to handle a high number of studies and the heterogeneity among them. The questions guiding their presentations revolved around how best to design and conduct systematic reviews, and how to synthesise and present results in a way that avoids failing to see the wood for the trees.

Using examples from their current research projects, Silvia and Laurence identified and illustrated two key themes: first, starting with the end in mind; and second, dealing with “messy” and multi-component reviews.

Part 1: Start with the end in mind

As discussed in earlier seminars, a successful systematic review stands or falls on a thoughtful, clearly formulated review protocol and on the consistent application of pre-specified design decisions.

Given the huge number of articles published every day, a systematic review that tries to synthesise evidence across all searchable references needs a sharply defined and consistently applied review protocol, including pre-determined inclusion and exclusion criteria. Only by knowing and fully understanding which research questions one is trying to answer, for which population and in which contexts, can one work effectively and efficiently through thousands of references.

As the audience of the CfE and synthesis series will already know, the PICO acronym (population, intervention, comparison and outcome) can be a useful way to discuss one’s research question internally or present it externally. But a number of other useful acronyms, e.g. SPIDER, exist for different types of research question.

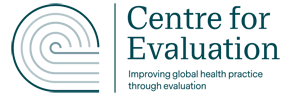

Dr. Silvia Maritano also introduced the helpful concept of lumpers vs. splitters in building one’s research questions (see figure 1), drawing on her current research on the child-related impacts of income support policies. Lumpers broaden the research question, e.g. by widening the range of outcomes of interest; this may increase the external validity of one’s findings (their transferability to a larger, more general population or context) and may make them of more interest to policy makers. By contrast, splitters narrow and restrict the research question so that, for example, only a single, very specific intervention is assessed and explored. This may reduce external validity, but it increases internal validity and helps to better understand the outcome of interest for this particular population in this specific context.

Of course, even the best-written and best-prepared review protocols may be confronted with new aspects and questions that were not anticipated. It is well advised to discuss these issues with the full team before deciding on any change. More importantly, every change decided upon needs to be transparently recorded in the review protocol. Conducting a systematic review with these points in mind helps to ensure quality and transparency in evidence review and synthesis.

When confronted with a large number of identified and eligible references, one further step is of utmost importance in any systematic review: the critical appraisal of research quality. Different study designs and analysis methods have different strengths and weaknesses. As a result, the evidence they generate may differ in its properties, e.g. regarding statistical inference (risk of false conclusions due to small sample sizes), internal validity (risk of systematic bias in findings) or external validity (i.e. generalisability to other contexts and population groups).

Such thorough, systematic critical appraisal helps to distinguish studies whose results carry high certainty from those whose results carry low certainty. This supports the synthesis of individual high-quality pieces of evidence into a larger picture that gives a better understanding of the research topic. Nevertheless, if the identified references are all of low quality, one should not be afraid to call out the lack of high-quality research needed to fully answer the question.

How to deal with large-scale research questions, which may yield a massive number of heterogeneous references, was the focus of the second part of the seminar.

Part 2: Dealing with “messy” evidence syntheses

Conducting systematic reviews on policy-related topics can get “messy” quite fast. Laurence Blanchard opened her presentation by introducing this concept of “messy”: when a review covers a broad topic and a broad range of evidence types, methods and outcomes, synthesising such heterogeneous components can be hard and can even produce misleading results.

Much as with lumpers and splitters, combining result components that should not have been combined decreases internal validity and may bias the findings. On the other hand, too little synthesis and too much reporting of individual studies may offer readers little value because of very low external validity, as the findings may only apply to very particular research contexts.

To illustrate the challenges and possible solutions, Laurence presented her team’s current evidence syntheses on the governance of diet-related policies. Such policies vary widely in nature, ranging from mandatory interventions by the public sector to voluntary mechanisms, e.g. industry self-regulation, with public-private partnerships in between. “Messy” reviews like these benefit most from a robust theoretical understanding of the many mechanisms of change and the contexts in which effects are hypothesised to occur. This understanding was needed to correctly screen the 27,930 references their searches returned.

Because in such “messy” reviews the researchers’ understanding of the topic develops over time, and concepts and design decisions need to be adapted accordingly, the evidence synthesis can become a largely iterative process. Screening software such as EPPI-Reviewer can be of great benefit here, facilitating both the screening and the synthesis stages.

One of many helpful tips is to code the reasons for exclusion directly while screening references. This can take a lot of time, but it lets researchers come back later and easily adapt their coding when criteria are changed retrospectively.
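To make the tip concrete, here is a minimal sketch of why recorded exclusion codes pay off when the protocol changes. It is not EPPI-Reviewer’s data model or API; the codes `EX1` to `EX4`, the `ScreenedRecord` class and `records_to_rescreen` are hypothetical names chosen for illustration. The idea is that if a criterion is later dropped, only records excluded *solely* on that criterion need to be screened again.

```python
from dataclasses import dataclass, field

# Hypothetical codebook for illustration only; a real review would take its
# codes and wording from the review protocol.
EXCLUSION_CODES = {
    "EX1": "wrong population",
    "EX2": "wrong intervention",
    "EX3": "wrong study design",
    "EX4": "outside the date range",
}


@dataclass
class ScreenedRecord:
    record_id: str
    # Every criterion the record failed, not just the first one noticed.
    exclusion_codes: set[str] = field(default_factory=set)

    @property
    def included(self) -> bool:
        return not self.exclusion_codes


def records_to_rescreen(records: list[ScreenedRecord], dropped_code: str) -> list[ScreenedRecord]:
    """Return records whose only recorded exclusion reason is a criterion that
    has since been dropped from the protocol; these need to be screened again."""
    return [r for r in records if r.exclusion_codes == {dropped_code}]


records = [
    ScreenedRecord("A", {"EX4"}),         # excluded on date range only
    ScreenedRecord("B", {"EX1", "EX4"}),  # also excluded on population
    ScreenedRecord("C"),                  # included
]
print([r.record_id for r in records_to_rescreen(records, "EX4")])  # ['A']
```

The sketch assumes screeners record every applicable reason. If only the first reason noticed were recorded, record B could have been coded `EX4` alone and would be wrongly sent back for re-screening.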

Additionally, when a high number of studies are to be included, stratifying the references by the quality of their evidence can be a reasonable and important way to protect internal validity, with sensitivity analyses showing how results change when lower-quality evidence is included. Alternatively or additionally, such stratification can be done by other factors, e.g. research context or country.
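As a rough illustration of a quality-stratified sensitivity analysis, the sketch below pools the highest-quality tier first and then adds each lower tier in turn. It assumes effects are already on a common scale with standard errors, a hypothetical numeric quality tier (1 = highest), and simple inverse-variance fixed-effect pooling; with genuinely heterogeneous studies a random-effects model would usually be more appropriate, so treat this only as a sketch of the stratification logic.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    name: str
    effect: float  # e.g. a log risk ratio or mean difference
    se: float      # standard error of that effect
    tier: int      # hypothetical quality scale: 1 = highest quality


def pool_fixed_effect(studies: list[Study]) -> tuple[float, float]:
    """Inverse-variance (fixed-effect) pooled estimate and its standard error."""
    weights = [1.0 / s.se**2 for s in studies]
    total = sum(weights)
    estimate = sum(w * s.effect for w, s in zip(weights, studies)) / total
    return estimate, math.sqrt(1.0 / total)


def sensitivity_by_tier(studies: list[Study]) -> list[tuple[int, int, float, float]]:
    """Pool the highest-quality tier first, then add each lower tier in turn.

    Returns (max_tier, n_studies, estimate, se) for each cumulative stratum."""
    rows = []
    for max_tier in sorted({s.tier for s in studies}):
        subset = [s for s in studies if s.tier <= max_tier]
        estimate, se = pool_fixed_effect(subset)
        rows.append((max_tier, len(subset), estimate, se))
    return rows


# Made-up numbers, for illustration only.
studies = [
    Study("trial A", 0.2, 0.1, tier=1),
    Study("trial B", 0.4, 0.1, tier=1),
    Study("cohort C", 1.0, 0.2, tier=2),
]
for max_tier, n, est, se in sensitivity_by_tier(studies):
    print(f"tiers <= {max_tier}: n={n}, estimate={est:.3f}, se={se:.3f}")
```

With these invented numbers the pooled estimate moves from 0.300 to 0.378 once the lower-tier study is added. A shift like this is exactly what the sensitivity analysis is meant to surface. Swapping `tier` for a country or context label would give the alternative stratification mentioned above.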

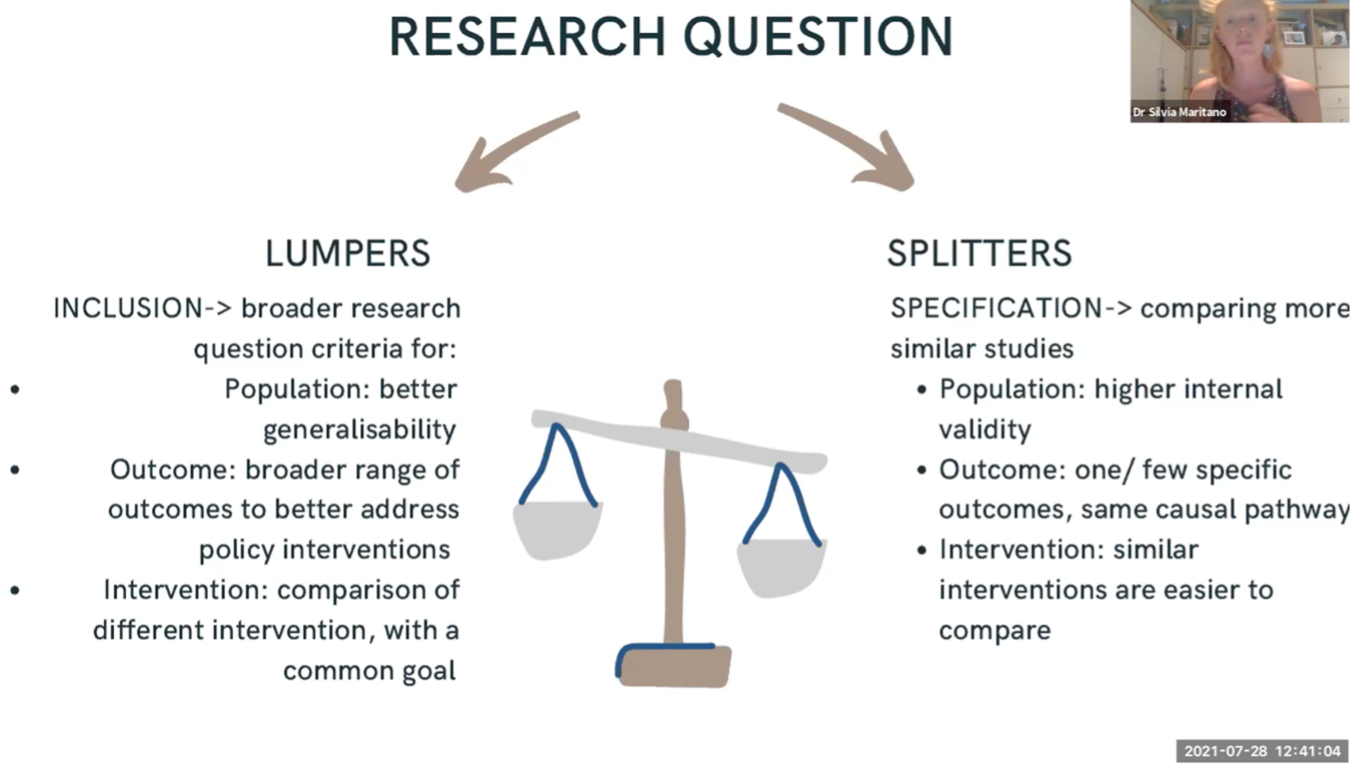

Laurence introduced the audience to another interesting approach based on the “value of information”: starting from the findings of the high-quality studies, one can assess how much value including the next, lower-quality study would add. In broad terms, value here is defined as the expected marginal gain in certainty relative to the level of uncertainty that currently exists in answering the research question (1; see figure 2). With this approach, working one’s way through lower-quality studies from most to least recent, one can find the threshold at which certainty reaches quasi-saturation.
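The stopping rule can be sketched numerically. The example below is a deliberately simplified, retrospective proxy and not the method presented in the seminar or a full value-of-information analysis as in reference (1). It assumes a two-option decision (adopt a policy with payoff equal to its effect, or do nothing with payoff 0) and approximates uncertainty about the effect as a normal distribution given by an inverse-variance pooled estimate. It uses the standard closed form for the expected value of perfect information (EVPI) under that normal assumption. Studies are added in whatever order the caller supplies, and the process stops once the next study reduces EVPI by less than a chosen `min_gain`. The function names and the threshold are hypothetical.

```python
import math


def normal_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def evpi(mean: float, sd: float) -> float:
    """Expected value of perfect information for a two-option decision:
    adopt (payoff = effect) or not (payoff = 0), with the effect assumed
    to be Normal(mean, sd**2)."""
    z = mean / sd
    # E[max(effect, 0)] for a normal effect, minus the value of deciding now.
    expected_with_perfect_info = mean * normal_cdf(z) + sd * normal_pdf(z)
    return expected_with_perfect_info - max(mean, 0.0)


def pooled(evidence: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance pooled (mean, sd) from (effect, standard error) pairs."""
    weights = [1.0 / se**2 for _, se in evidence]
    total = sum(weights)
    mean = sum(w * effect for w, (effect, _) in zip(weights, evidence)) / total
    return mean, math.sqrt(1.0 / total)


def saturation_point(ordered: list[tuple[float, float]], min_gain: float) -> int:
    """Add studies in the given order (highest quality first) and return how
    many are kept before the next one reduces EVPI by less than min_gain."""
    kept = [ordered[0]]
    current = evpi(*pooled(kept))
    for study in ordered[1:]:
        candidate = evpi(*pooled(kept + [study]))
        if current - candidate < min_gain:
            break  # marginal gain in certainty too small: quasi-saturation
        kept.append(study)
        current = candidate
    return len(kept)


# Three identical, made-up studies: each extra one shrinks EVPI by less.
print(saturation_point([(0.0, 1.0)] * 3, min_gain=0.1))
```

A formal analysis would estimate the expected value of the next study *before* seeing its result (e.g. expected value of sample information), not after. In this sketch, a study that raises EVPI, for example because it conflicts with the existing evidence, also triggers the stop; a real review would need to treat that case explicitly.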

Finally, in every review, but especially in “messy” ones, it is important to visualise the results and describe the body of evidence found. This ensures that all readers can quickly grasp the review results, e.g. which countries most of the evidence comes from, or which components of the research topic have been studied and which have not. Another useful visualisation is an evidence map, which conveys a lot of information in little space (see figure 3).

You can watch the recording of the session here.

References

(1) Minelli C, Baio G. Value of Information: A Tool to Improve Research Prioritization and Reduce Waste. PLoS Med. 2015 Sep 29;12(9):e1001882. doi: 10.1371/journal.pmed.1001882.
